XGB

2025年9月24日

15:13

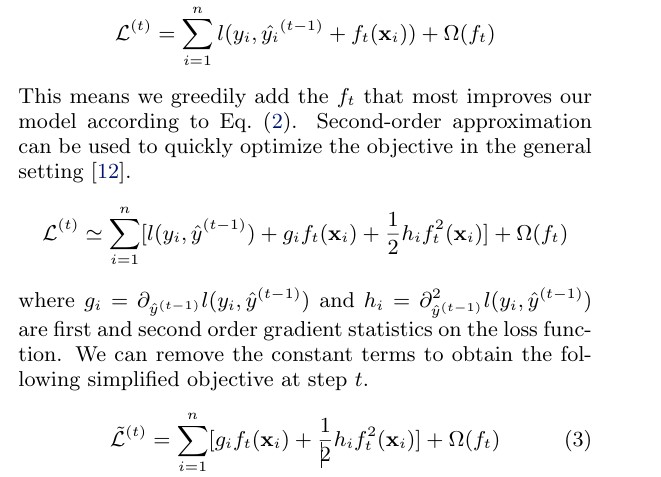

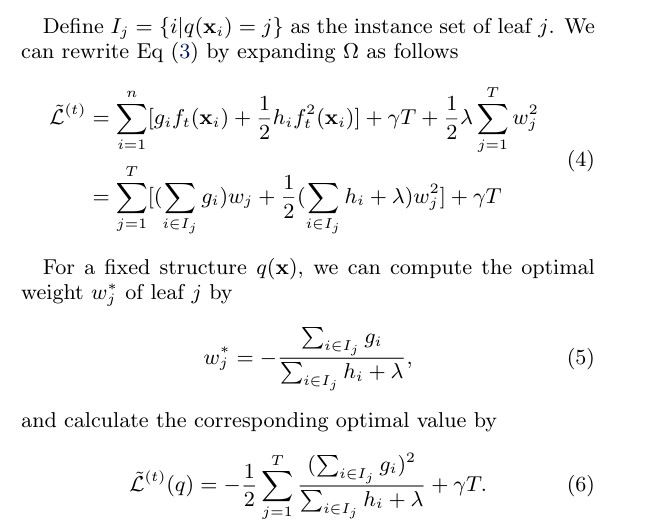

xgboost different from gbdt is, instead of find the regression tree structure first and then calculate the leaf nodes value c_{tj},we solve both two parts at one time.

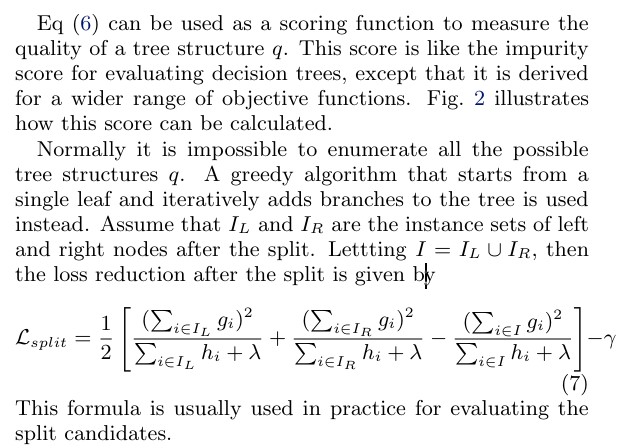

- XGB通过将树y'用其叶子节点的输出值表示,巧妙地将叶子节点的输出值与损失函数关联起来(先假设树模型已经确定,然后用贪婪方式,Normally it is impossible to enumerate all the possible tree structures q. A greedy algorithm that starts from a single leaf and iteratively adds branches to the tree is used instead);

- 然后,通过对损失函数在第t棵树y'_t处进行二阶泰勒展开,构建了一个一元二次方程,巧妙地确保了叶子节点的输出值就是让损失函数最小的那个值。

分裂点寻找方法:

- Exact greedy 方法

对特征进行预排序,enumerates over all the possible splits on all the features.

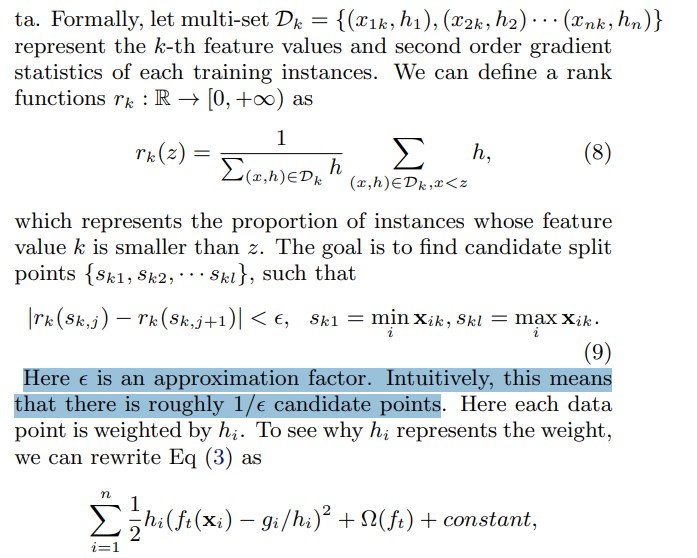

- 近似方法,对特征进行分桶,离散化,分桶的方法用加权分位数,权重为样本的二阶导,它保证每个桶(bucket)里样本的权重和(即二阶梯度之和)是大致相等的。

这个方法有2个变体, 一种是在每次构建树之前对特征进行分桶,一种是在每次节点分裂之后对特征进行分桶。第2种变体更精确 ,但费时。

There are two variants of the algorithm, depending on when the proposal is given. The global variant proposes all the candidate splits during the initial phase of tree construction, and uses the same proposals for split finding at all levels. The local variant re-proposes after each split.